Hollywood's Fear of AI and the Revolutionary Diff2Lip Technology

Chapter 1: The Fear and Fascination with AI

Hollywood's apprehension toward AI is palpable, since the technology threatens the industry's established norms. Yet one AI innovation has emerged that even the film industry may find hard to resist: a model that lets actors appear to speak in many languages.

Introducing Diff2Lip, a model that revolutionizes lip synchronization and could significantly impact not only the film industry but also the lives of those with speech disabilities.

Chapter 2: A Multilingual Actress

In 2020, the South Korean film "Parasite" (released in 2019) made history as the first non-English-language film to win the Oscar for Best Picture. Audiences around the world embraced the film in its original Korean, showing that a powerful story transcends language barriers.

Modern films and series often offer dubbed versions, but the experience can feel disconnected because the actors' lip movements do not match the translated dialogue. Picture watching "Parasite" or "Squid Game" with the actors genuinely appearing to speak your native language. This is what Diff2Lip aims to deliver.

Section 2.1: Understanding Diff2Lip's Functionality

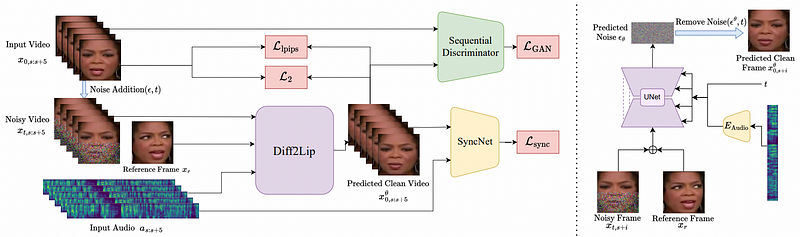

The primary aim of Diff2Lip is quite clear. It works by masking the lower portion of an actor's face and reconstructing it to synchronize lip movements with new audio. This technique, known as inpainting, is akin to restoration work in the arts.
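
To make the masking step concrete, here is a minimal sketch in plain Python. It assumes a frame is a small grid of pixel values and simply blanks out the bottom half, which is the region an inpainting model would then have to regenerate. The function name `mask_lower_half` and the tiny 4x3 frame are hypothetical, chosen only for illustration; this is not Diff2Lip's actual preprocessing code.

```python
def mask_lower_half(frame, fill=0):
    """Return a copy of `frame` with its lower half replaced by `fill`.

    `frame` is a list of rows, each row a list of pixel values.
    The original frame is left untouched.
    """
    cutoff = len(frame) // 2  # first row that belongs to the lower half
    return [
        list(row) if y < cutoff else [fill] * len(row)
        for y, row in enumerate(frame)
    ]


# A toy 4x3 "face crop": the top two rows stand in for the eyes and nose,
# the bottom two rows stand in for the mouth region.
frame = [
    [10, 20, 30],
    [40, 50, 60],
    [70, 80, 90],
    [15, 25, 35],
]

masked = mask_lower_half(frame)
print(masked)  # [[10, 20, 30], [40, 50, 60], [0, 0, 0], [0, 0, 0]]
```

The upper half survives unchanged, which is what lets the model keep the actor's identity and pose while only the mouth area is redrawn.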

Section 2.2: The Science Behind Diff2Lip

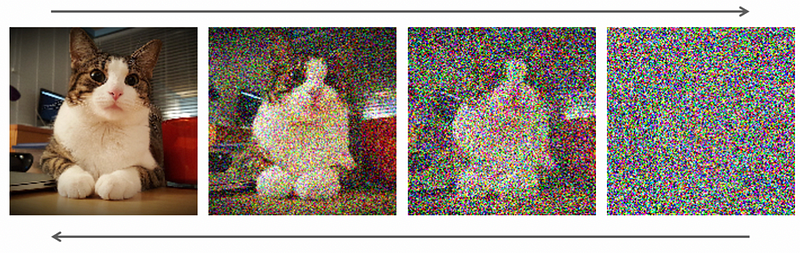

Diff2Lip uses a technique called diffusion, the same family of methods behind image generators such as DALL-E. The process starts from an image of pure random noise and gradually refines it into a new, coherent image by removing that noise step by step.

In simpler terms, the model begins with a noisy image and, through a series of steps, eliminates the noise until the desired image emerges based on specific conditions.

For Diff2Lip, the condition is the new audio track: each generated frame must show mouth movements that match the speech, so that the result looks as if the actor had spoken the lines.
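
The idea of stepwise denoising toward a condition can be sketched with a toy loop. This is a deliberately simplified illustration, not a real diffusion model: in a real system a trained neural network predicts the noise from the noisy image and the condition, whereas here that network is replaced by a stand-in that measures the gap to the target directly. The names `toy_denoise` and `condition` are hypothetical.

```python
import random


def toy_denoise(condition, steps=50, seed=0):
    """Refine pure noise toward `condition` over a fixed number of steps.

    ASSUMPTION: the 'noise predictor' below is an oracle that knows the
    target. A real diffusion model would use a trained network instead.
    """
    rng = random.Random(seed)
    # Start from Gaussian noise, one value per "pixel".
    x = [rng.gauss(0.0, 1.0) for _ in condition]

    for step in range(steps):
        # Stand-in for the trained network: estimate the remaining noise.
        predicted_noise = [xi - ci for xi, ci in zip(x, condition)]
        # Remove an equal share of the estimated noise at each step,
        # so that nothing is left after the final step.
        remaining = steps - step
        x = [xi - ni / remaining for xi, ni in zip(x, predicted_noise)]

    return x


condition = [0.2, 0.8, 0.5]  # hypothetical target pixel values
result = toy_denoise(condition)
print([round(v, 6) for v in result])
```

Each pass removes a little more noise, so early iterations are still mostly random and the target only becomes recognizable toward the end, which mirrors the intuition described above.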

Section 2.3: The Technical Framework

Despite its sophisticated architecture, Diff2Lip's underlying concept is straightforward. Like other neural networks, it is trained by minimizing a measure of error, called a loss, between its predicted outputs and the actual results.

The model faces the challenge of ensuring:

- High-quality video output.

- Accurate synchronization with the new audio.

- Consistency across all frames in the video.

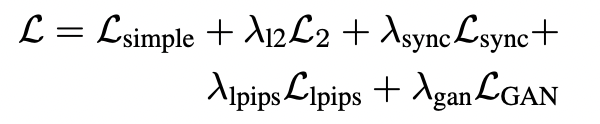

To tackle this, Diff2Lip incorporates multiple loss functions that work simultaneously to refine its outputs.
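
One common way to combine several objectives is a weighted sum of individual losses, one per goal. The sketch below shows that pattern for the three goals listed above. The specific loss definitions, the `sync_scores` input (imagined as coming from some audio-visual sync scorer) and the weights are all illustrative assumptions, not the losses or values used by the Diff2Lip authors.

```python
def mse(a, b):
    """Mean squared error between two equal-length lists of pixel values."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def quality_loss(pred_frames, true_frames):
    """Average per-frame reconstruction error (stand-in for image quality)."""
    errors = [mse(p, t) for p, t in zip(pred_frames, true_frames)]
    return sum(errors) / len(errors)


def sync_loss(sync_scores):
    """Penalty for poor lip sync. Scores lie in [0, 1]; 1 means in sync.

    ASSUMPTION: scores come from a hypothetical audio-visual sync scorer.
    """
    return sum(1.0 - s for s in sync_scores) / len(sync_scores)


def temporal_loss(pred_frames):
    """Penalty for abrupt changes between consecutive frames."""
    if len(pred_frames) < 2:
        return 0.0
    diffs = [mse(a, b) for a, b in zip(pred_frames, pred_frames[1:])]
    return sum(diffs) / len(diffs)


def total_loss(pred_frames, true_frames, sync_scores, weights=(1.0, 0.5, 0.1)):
    """Weighted sum of the three losses. The weights are illustrative only."""
    w_quality, w_sync, w_temporal = weights
    return (
        w_quality * quality_loss(pred_frames, true_frames)
        + w_sync * sync_loss(sync_scores)
        + w_temporal * temporal_loss(pred_frames)
    )


# Two tiny 2-pixel frames, purely for demonstration.
pred = [[0.0, 1.0], [1.0, 1.0]]
true = [[0.0, 1.0], [1.0, 0.0]]
loss = total_loss(pred, true, sync_scores=[1.0, 0.5])
print(loss)
```

Because the terms are summed, training pushes on all three goals at once, and the weights decide how much each one matters when they pull in different directions.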

Chapter 3: The Broad Applications of Diff2Lip

The implications of Diff2Lip extend well beyond Hollywood. Various industries stand to benefit significantly from this technology:

- Entertainment: Enhancing dubbing accuracy in films and creating realistic lip movements in animations.

- Virtual Communication: Improving video conferencing quality by synchronizing audio and video in real time.

- Accessibility: Aiding people with hearing impairments who rely on lip reading, through accurate lip synchronization.

- Education: Developing language learning tools that visually demonstrate pronunciation.

- Healthcare: Supporting speech therapy with avatars that illustrate proper mouth movements.

Section 3.1: A New Dawn for AI

Diff2Lip represents a pivotal shift in AI technology, prioritizing advancements that enhance the quality of life for a broader audience rather than just a select few.

As we embrace this innovation, it's clear that AI's true potential lies in its ability to empower and uplift society as a whole.